I have to apologize for how long it’s taken me to publish my thoughts on OpenWorld this year. I was pretty busy, and each night I pretty much collapsed as soon as I got back to the hotel. I’ll try to get these posts out over the next couple of days.

-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=

The day started with a keynote by Tom Kilroy, and I have to say I really enjoyed it. In retrospect, IMHO, it was probably the best one of the week. Mr. Kilroy talked about how connected our world is becoming, the number of devices connected to the internet and the amount of traffic being generated. Before 2010, almost 150 exabytes of data had traversed the net since the internet ‘began’. 2010 alone accounts for another 175 exabytes, which brings the total to 325 exabytes since the inception of the internet. With an estimated 10 billion new devices connected by 2015 and the explosive popularity of video, that amount of data is going to be staggering. Mr. Kilroy continued by talking about how to structure that data so that it is more relevant and finding what you want takes less time.

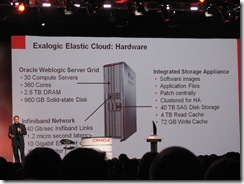

Thomas Kurian was next on stage, talking about cloud computing, which seemed to be the primary focus of many vendors both in the keynotes and on the exhibition floor. I remember that years ago Oracle talked about using many cheap x86 computers in a grid computing environment instead of huge SMP systems, but I guess the reality is that those grids are hard to maintain. Now Oracle has Exalogic and Exadata, where a few high-end, tightly integrated, reliable systems deliver outstanding performance and scalability.

Thomas Kurian also talked about systems management and how you can use Business Performance Indicators (BPI) to get a better view of how your system is performing than raw CPU, disk and similar metrics give you. During the demo, the root cause of a performance issue turned out to be a CPU bottleneck. I find this interesting because, if that had been my environment, I would have been paged shortly after the CPU maxed out. The BPI approach is pretty much the opposite of how most DBAs work today.

The first session I attended was Explaining the Explain Plan (S316955), and I have to say I really enjoyed it. So much material was covered that I couldn’t possibly do it justice in a few lines, so you should definitely download this presentation. You can also follow the Optimizer team’s blog at http://blogs.oracle.com/optimizer/. A second presentation that afternoon, S317019, built on the material covered here, but unfortunately it was full. A friend of mine attended and said it was good as well.

In a nutshell, this presentation covered what an execution plan is and how to generate one, along with definitions of what makes a good plan, cost, performance, cardinality, etc. It then went through the causes of incorrect cardinality estimates by the optimizer and their solutions; access paths, how the optimizer chooses which one to use and situations where it can choose the wrong one; and join types, the causes of incorrect join choices and situations where the join order is wrong. To drive the points home, the presenter included examples and asked the audience questions.
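The session didn’t include code, but as a rough illustration of one classic cause of bad cardinality estimates (correlated columns), here’s a toy Python sketch of my own, not something from the presentation. It assumes the textbook calculation with no histograms or extended statistics: each equality predicate gets a selectivity of 1/NDV (number of distinct values), and predicates on different columns are treated as independent, so their selectivities are multiplied. The CARS table and its `make`/`model` columns are hypothetical. Because `model` determines `make`, the independence assumption halves the estimate.

```python
# Toy model of a cost-based optimizer's cardinality estimate for ANDed
# equality predicates. Assumptions (not Oracle's actual code): uniform data,
# no histograms, selectivity = 1/NDV, and independent columns.

def estimated_cardinality(rows, predicates):
    """Estimate how many rows match all `column = value` predicates."""
    estimate = float(len(rows))
    for column in predicates:
        ndv = len({row[column] for row in rows})  # number of distinct values
        estimate *= 1.0 / ndv                      # uniform-distribution selectivity
    return estimate


def actual_cardinality(rows, predicates):
    """Count the rows that really match all predicates."""
    return sum(
        all(row[column] == value for column, value in predicates.items())
        for row in rows
    )


# Hypothetical CARS table: model determines make, so the columns are correlated.
pairs = [("Toyota", "Corolla"), ("Toyota", "Camry"),
         ("Honda", "Civic"), ("Honda", "Accord")]
cars = [{"make": make, "model": model} for make, model in pairs for _ in range(250)]

where = {"make": "Toyota", "model": "Corolla"}
print(estimated_cardinality(cars, where))  # 1000 * 1/2 * 1/4 = 125.0
print(actual_cardinality(cars, where))     # 250
```

With more correlated predicates the error compounds, which is one way the optimizer ends up picking the wrong access path or join order. 11g’s extended statistics (column groups) are meant to capture this kind of correlation.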

My next session was Tuning All Layers of the Oracle E-Business Suite Environment (S317108). This session was very good as well and was broken up by layer: the database tier, the applications tier, the concurrent manager, and so on. What I liked about it was that the presenters talked about common performance problems, their causes, and how to resolve them or gather the right information to send to Oracle Support.

Another good session on Tuesday was

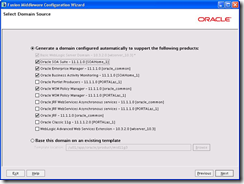

Oracle Fusion Middleware 11g: Architecting for Continuous Availability S317391. This session talked about how to reduce the impact of both planned and unplanned outages. How to upgrade your deployed applications and apply minor Weblogic patches with no downtime. A good review of HA features was in there as well. Since I am new to Weblogic this provided me with a good overview but for those experienced there many not be much here for you.

The last session I attended was SQL Tuning for Smarties, Dummies, and Everyone in Between (S317295). Arup Nanda talked about the typical challenges DBAs face with SQL tuning, from ‘queries from hell’ to dealing with data that evolves over time. Jagan Athreya continued the presentation by talking about the new features in 11g and 11gR2.

One of the features that caught my eye was the ability in 11gR2 to save all the metadata related to a particular SQL statement as an interactive report. It looks like it contains all the information you would need: who is executing the statement, its bind variables, its execution plan and its metrics.

Another feature, also new in 11gR2, is the ability to monitor PL/SQL, so you can figure out where PL/SQL blocks are spending most of their time. The session went on to talk about the common problems that cause SQL to go bad (optimizer stats, application issues, cursor sharing, etc.) and how these new database features can help you.

Overall, Tuesday had some great sessions.